UI / UX Design

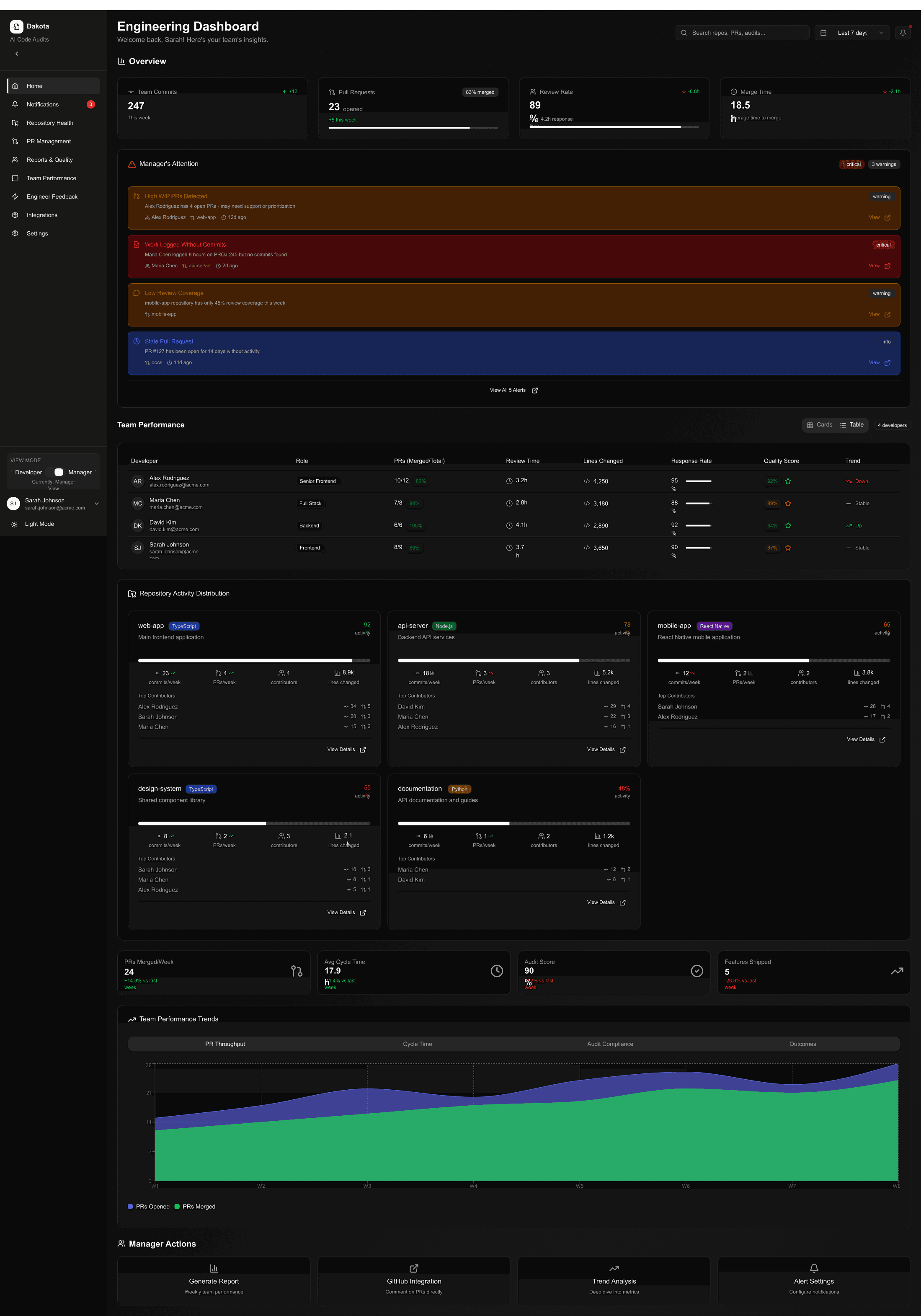

Dakota - AI Code Checker

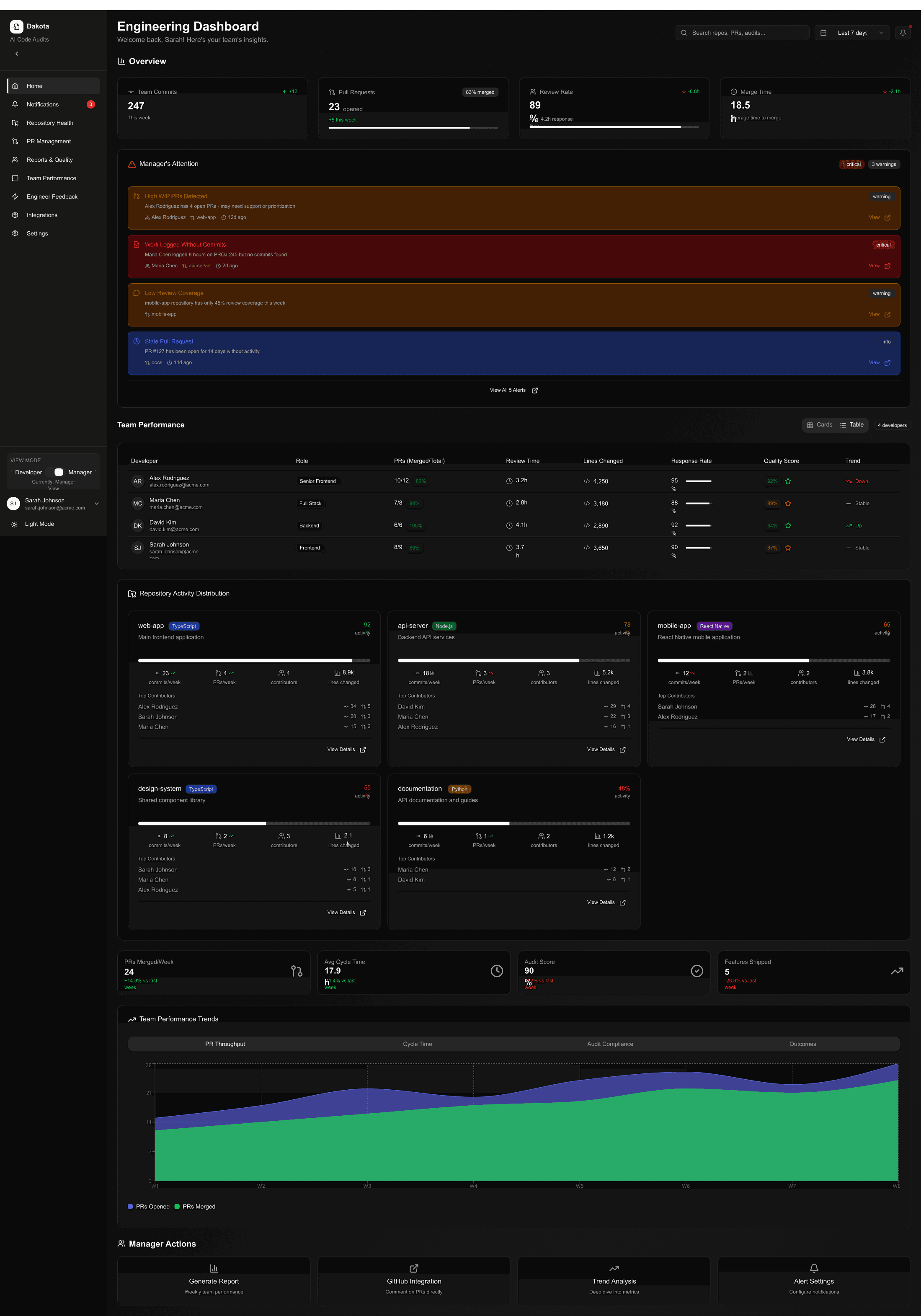

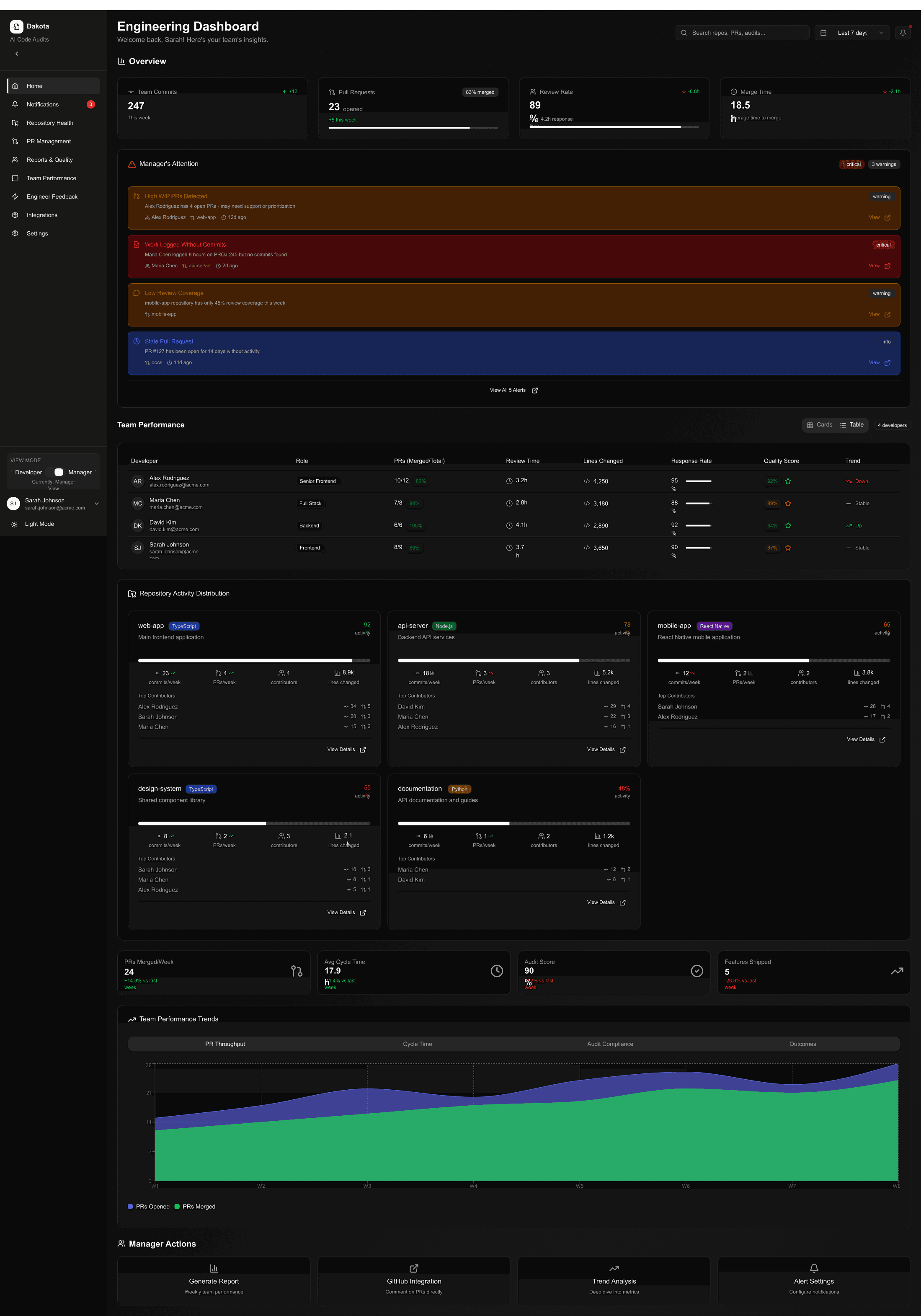

Dakota is an AI-powered code quality checker that analyzes GitHub repositories using large language models. It reviews code structure, adherence to best practices, and potential issues, then delivers clear, actionable feedback to managers.

Year:

2025

Industry:

Software

Client:

Matt Culteron

Project Duration:

6 weeks

Background:

Dakota is an AI-driven code quality and review platform designed to help engineering managers gain deeper visibility into their teams’ codebases. By securely connecting to GitHub repositories, Dakota automatically analyzes source code using advanced large language models to identify quality issues, inconsistencies, and opportunities for improvement.

Rather than leaving teams to rely solely on manual code reviews, Dakota evaluates code for readability, maintainability, performance risks, and adherence to best practices. It translates these insights into clear, manager-friendly feedback, making it easier for non-technical stakeholders to understand a project's health and guide their teams effectively.

With Dakota, teams can reduce review bottlenecks, improve overall code quality, and keep standards consistent across projects, while developers stay focused on building rather than policing code.

How it works:

Dakota connects directly to GitHub repositories, automatically reviews the code using large language models, and evaluates it against best practices, readability standards, and maintainability principles.

Once a repository is connected, Dakota scans the codebase and generates structured feedback highlighting:

- Code quality and maintainability issues
- Inconsistencies and potential risks
- Improvement recommendations written in plain, actionable language

Instead of raw technical logs, Dakota presents insights in a manager-friendly format, enabling faster decision-making and more meaningful conversations with development teams.

Challenges:

1. Designing for Mixed Technical Expertise

Dakota is used by both engineering managers and technical leads, who have different levels of coding expertise. A key challenge was presenting complex code analysis in a way that is useful to experienced developers yet understandable and actionable for non-technical managers, without oversimplifying for one group or overwhelming the other.

2. Translating LLM Output into Actionable Insights

Raw AI-generated feedback can be verbose, inconsistent, or overly technical. A major UX challenge was transforming this output into structured, trustworthy insights that read as clear, concise, and human, without undermining confidence in the accuracy of the analysis.

3. Building Trust in AI Recommendations

Managers are hesitant to act on AI feedback if they don’t understand why a recommendation was made. The challenge was to design explanations and supporting context that increase trust in the system without exposing unnecessary technical complexity or internal AI mechanics.

4. Avoiding Information Overload

Large repositories can generate a high volume of issues. The UX challenge was to prioritize what matters most: surfacing critical problems first, grouping related feedback, and letting users quickly scan a repository's overall health.

Impact:

Dakota reduces reliance on manual reviews while improving visibility into code health. Managers gain confidence in code quality, developers receive clearer guidance, and teams maintain consistent standards without disrupting their workflow.

UI / UX Design

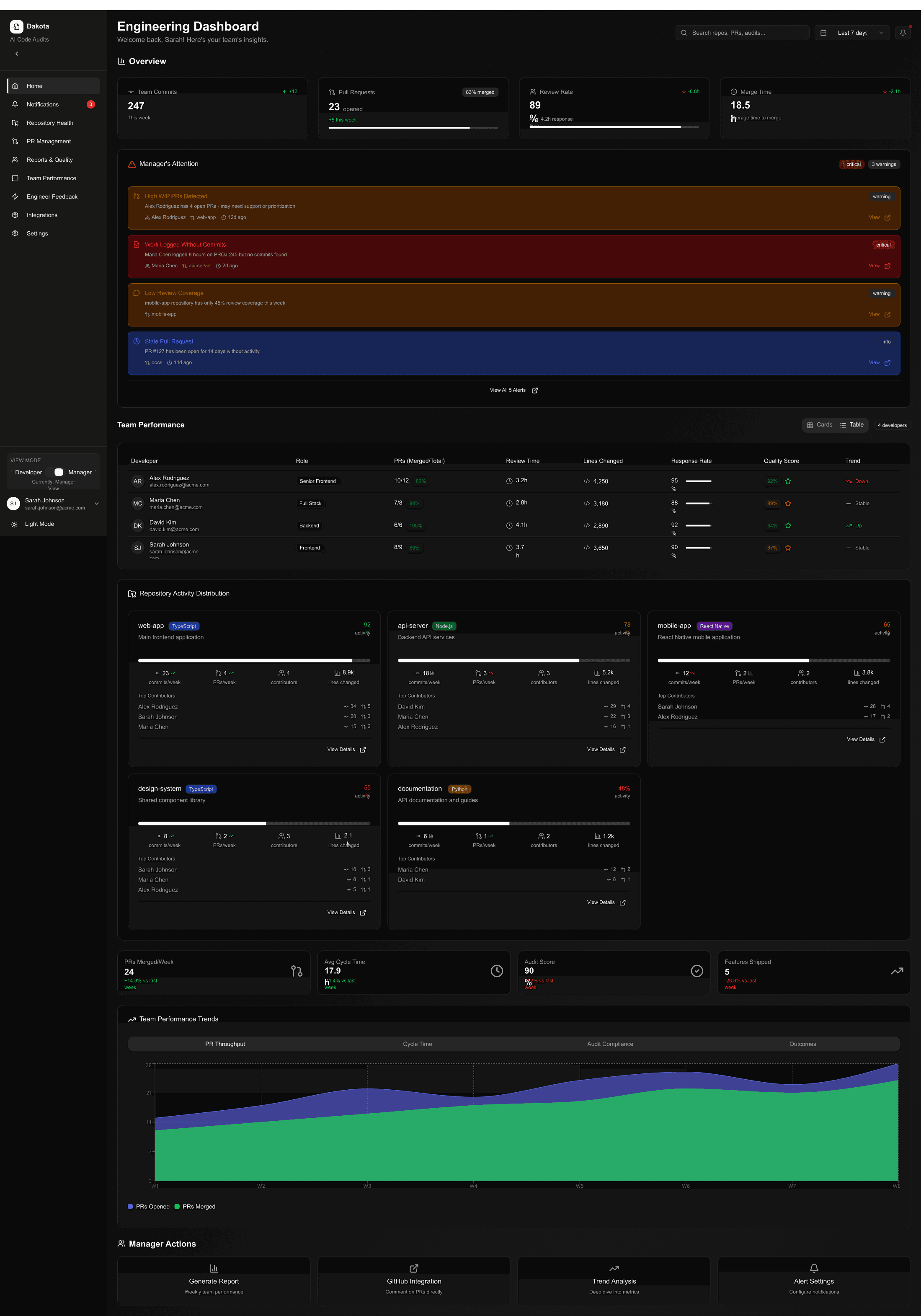

Dakota - AI Code Checker

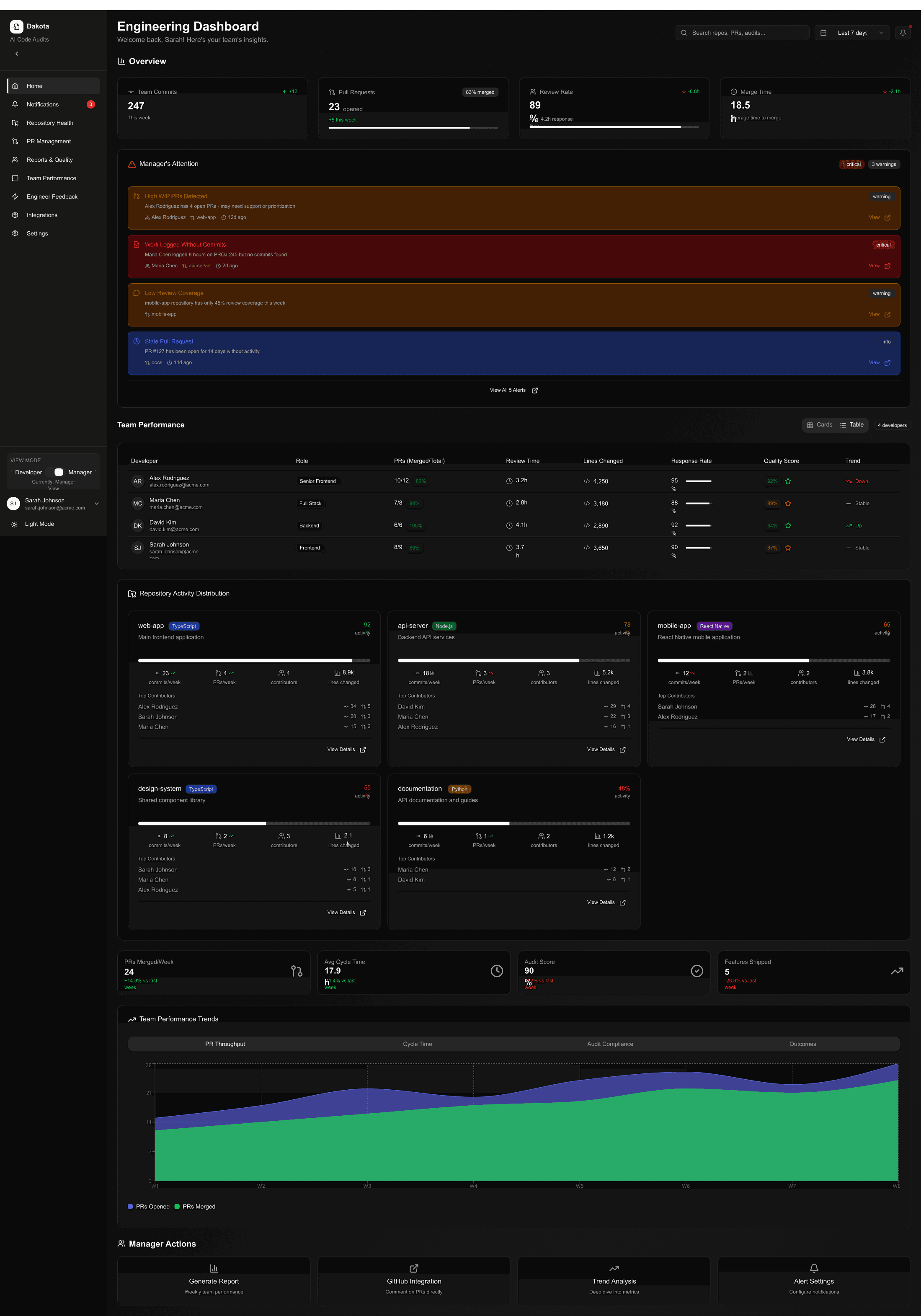

Dakota is an AI-powered code quality checker that analyzes GitHub repositories using large language models. It reviews code structure, best practices, and potential issues, then delivers clear, actionable feedback to managers

Year :

2025

Industry :

Software

Client :

Matt Culteron

Project Duration :

6 weeks

Background :

Dakota is an AI-driven code quality and review platform designed to help engineering managers gain deeper visibility into their teams’ codebases. By securely connecting to GitHub repositories, Dakota automatically analyzes source code using advanced large language models to identify quality issues, inconsistencies, and opportunities for improvement.

Instead of relying solely on manual code reviews, Dakota evaluates code for readability, maintainability, performance risks, and adherence to best practices. The insights are translated into clear, manager-friendly feedback, making it easier for non-technical stakeholders to understand the health of a project and guide teams effectively.

With Dakota, teams can reduce review bottlenecks, improve overall code quality, and ensure consistent standards across projects—while allowing developers to focus on building, not policing code.

How it works :

Dakota is an AI-driven code quality platform designed to bridge this gap. By connecting directly to GitHub repositories, Dakota automatically reviews code using large language models and evaluates it against best practices, readability standards, and maintainability principles.

Once a repository is connected, Dakota scans the codebase and generates structured feedback highlighting:

Code quality and maintainability issues

Inconsistencies and potential risks

Improvement recommendations written in plain, actionable language

Instead of raw technical logs, Dakota presents insights in a manager-friendly format, enabling faster decision-making and more meaningful conversations with development teams.

Challenge :

1. Designing for Mixed Technical Expertise

Dakota is used by both engineering managers and technical leads, each with different levels of coding expertise. One of the key challenges was presenting complex code analysis in a way that is useful for experienced developers while still being understandable and actionable for non-technical managers—without oversimplifying or overwhelming either group.

2. Translating LLM Output into Actionable Insights

Raw AI-generated feedback can be verbose, inconsistent, or overly technical. A major UX challenge was transforming this output into structured, trustworthy insights that feel clear, concise, and human—while maintaining confidence in the accuracy of the analysis.

3. Building Trust in AI Recommendations

Managers are hesitant to act on AI feedback if they don’t understand why a recommendation was made. The challenge was to design explanations and supporting context that increase trust in the system without exposing unnecessary technical complexity or internal AI mechanics.

4. Avoiding Information Overload

Large repositories can generate a high volume of issues. The UX challenge was to prioritize what matters most—surfacing critical problems first, grouping related feedback, and enabling users to quickly scan the overall health of a repository.

Impact :

Dakota reduces the reliance on manual reviews while improving visibility into code health. Managers gain confidence in code quality, developers receive clearer guidance, and teams maintain consistent standards without disrupting their workflow.

UI / UX Design

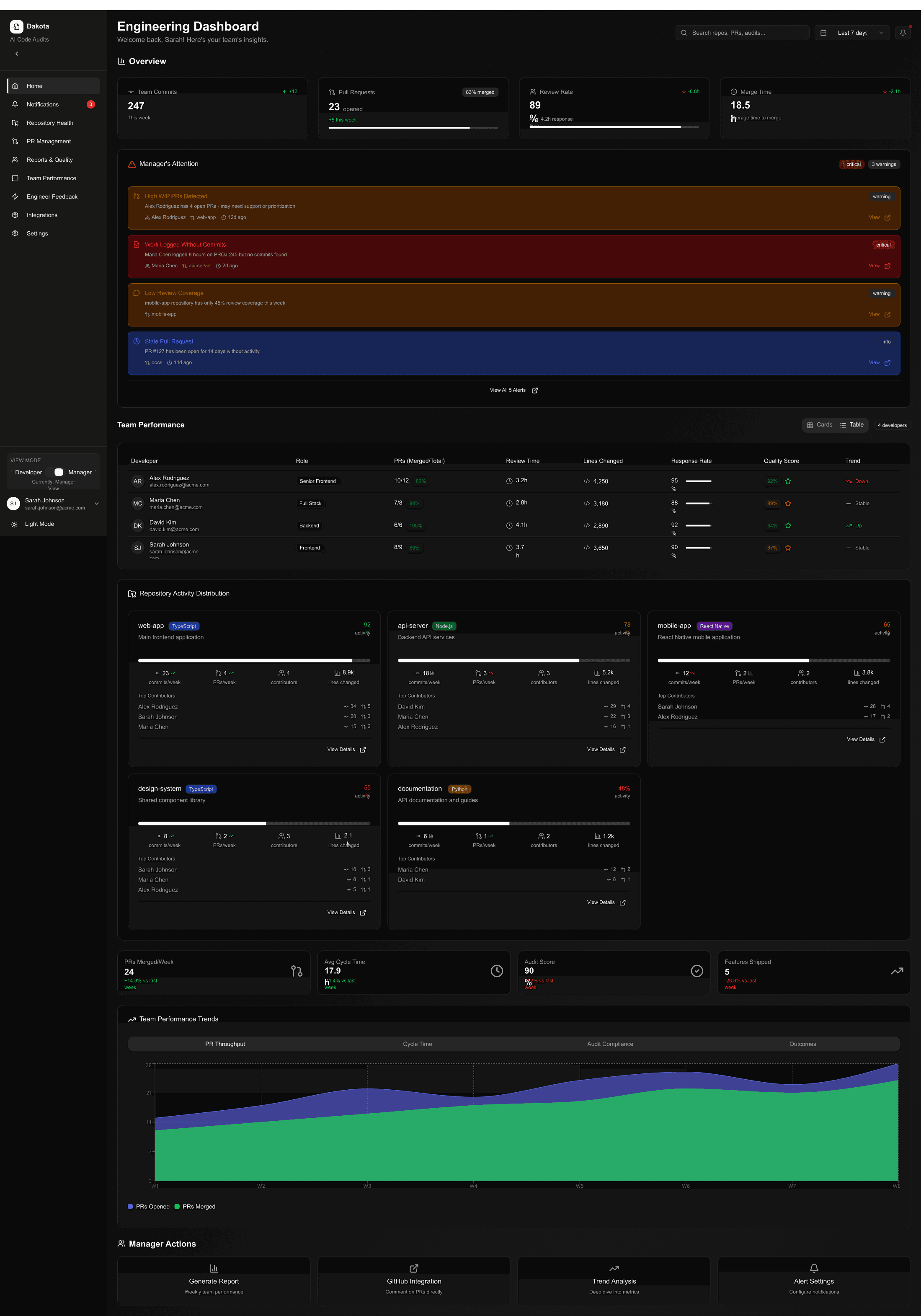

Dakota - AI Code Checker

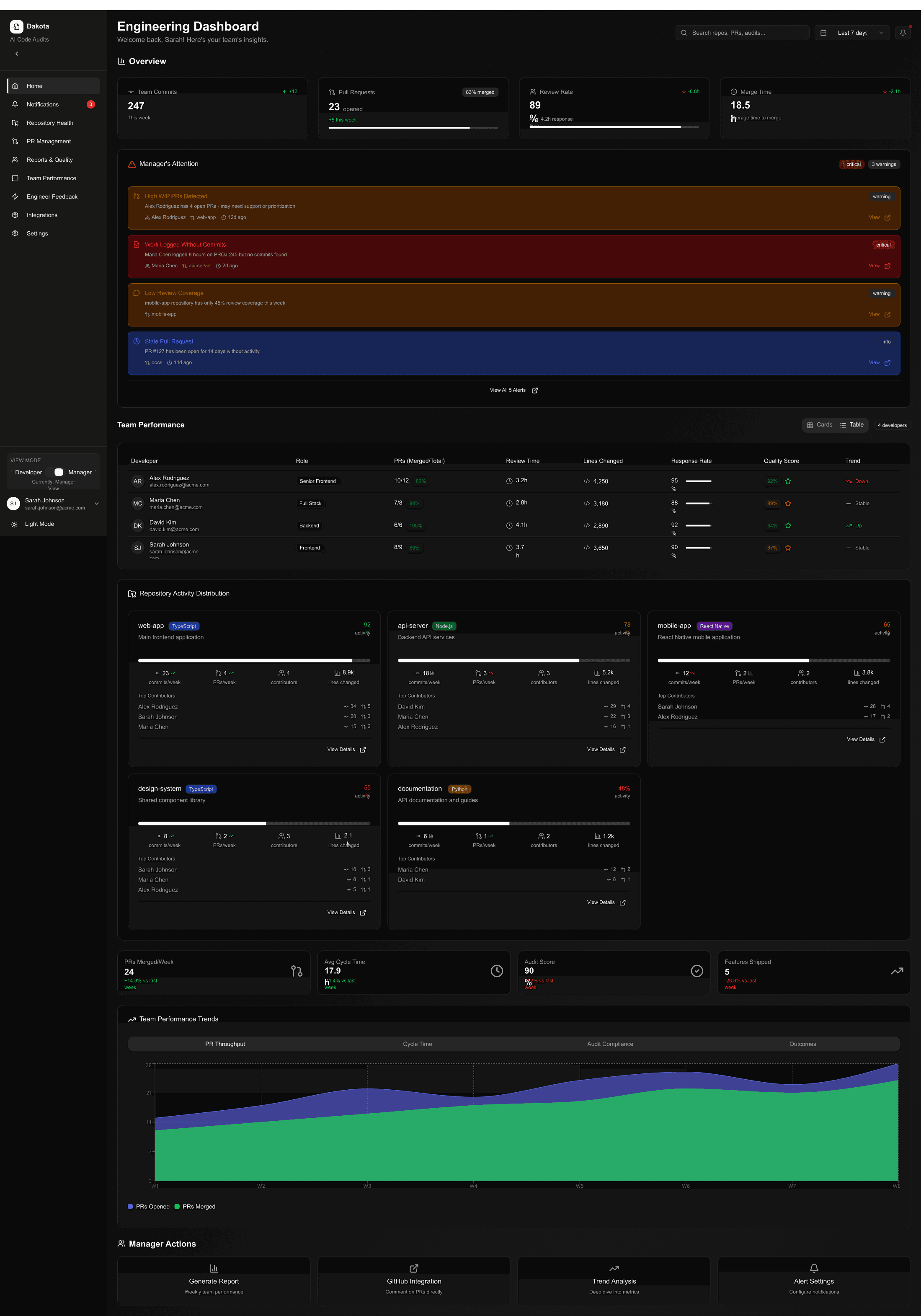

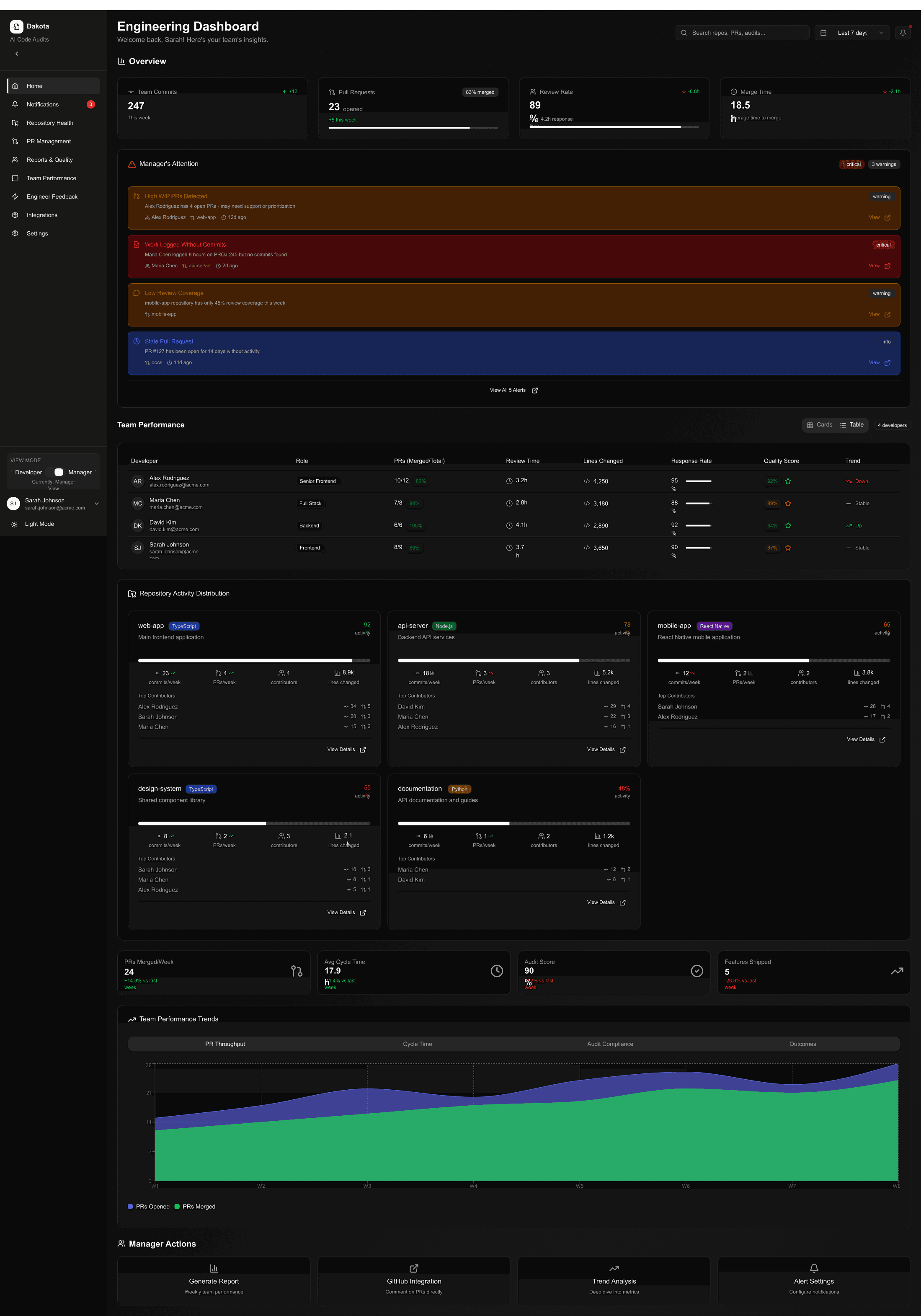

Dakota is an AI-powered code quality checker that analyzes GitHub repositories using large language models. It reviews code structure, best practices, and potential issues, then delivers clear, actionable feedback to managers

Year :

2025

Industry :

Software

Client :

Matt Culteron

Project Duration :

6 weeks

Background :

Dakota is an AI-driven code quality and review platform designed to help engineering managers gain deeper visibility into their teams’ codebases. By securely connecting to GitHub repositories, Dakota automatically analyzes source code using advanced large language models to identify quality issues, inconsistencies, and opportunities for improvement.

Instead of relying solely on manual code reviews, Dakota evaluates code for readability, maintainability, performance risks, and adherence to best practices. The insights are translated into clear, manager-friendly feedback, making it easier for non-technical stakeholders to understand the health of a project and guide teams effectively.

With Dakota, teams can reduce review bottlenecks, improve overall code quality, and ensure consistent standards across projects—while allowing developers to focus on building, not policing code.

How it works :

Dakota is an AI-driven code quality platform designed to bridge this gap. By connecting directly to GitHub repositories, Dakota automatically reviews code using large language models and evaluates it against best practices, readability standards, and maintainability principles.

Once a repository is connected, Dakota scans the codebase and generates structured feedback highlighting:

Code quality and maintainability issues

Inconsistencies and potential risks

Improvement recommendations written in plain, actionable language

Instead of raw technical logs, Dakota presents insights in a manager-friendly format, enabling faster decision-making and more meaningful conversations with development teams.

Challenge :

1. Designing for Mixed Technical Expertise

Dakota is used by both engineering managers and technical leads, each with different levels of coding expertise. One of the key challenges was presenting complex code analysis in a way that is useful for experienced developers while still being understandable and actionable for non-technical managers—without oversimplifying or overwhelming either group.

2. Translating LLM Output into Actionable Insights

Raw AI-generated feedback can be verbose, inconsistent, or overly technical. A major UX challenge was transforming this output into structured, trustworthy insights that feel clear, concise, and human—while maintaining confidence in the accuracy of the analysis.

3. Building Trust in AI Recommendations

Managers are hesitant to act on AI feedback if they don’t understand why a recommendation was made. The challenge was to design explanations and supporting context that increase trust in the system without exposing unnecessary technical complexity or internal AI mechanics.

4. Avoiding Information Overload

Large repositories can generate a high volume of issues. The UX challenge was to prioritize what matters most—surfacing critical problems first, grouping related feedback, and enabling users to quickly scan the overall health of a repository.

Impact :

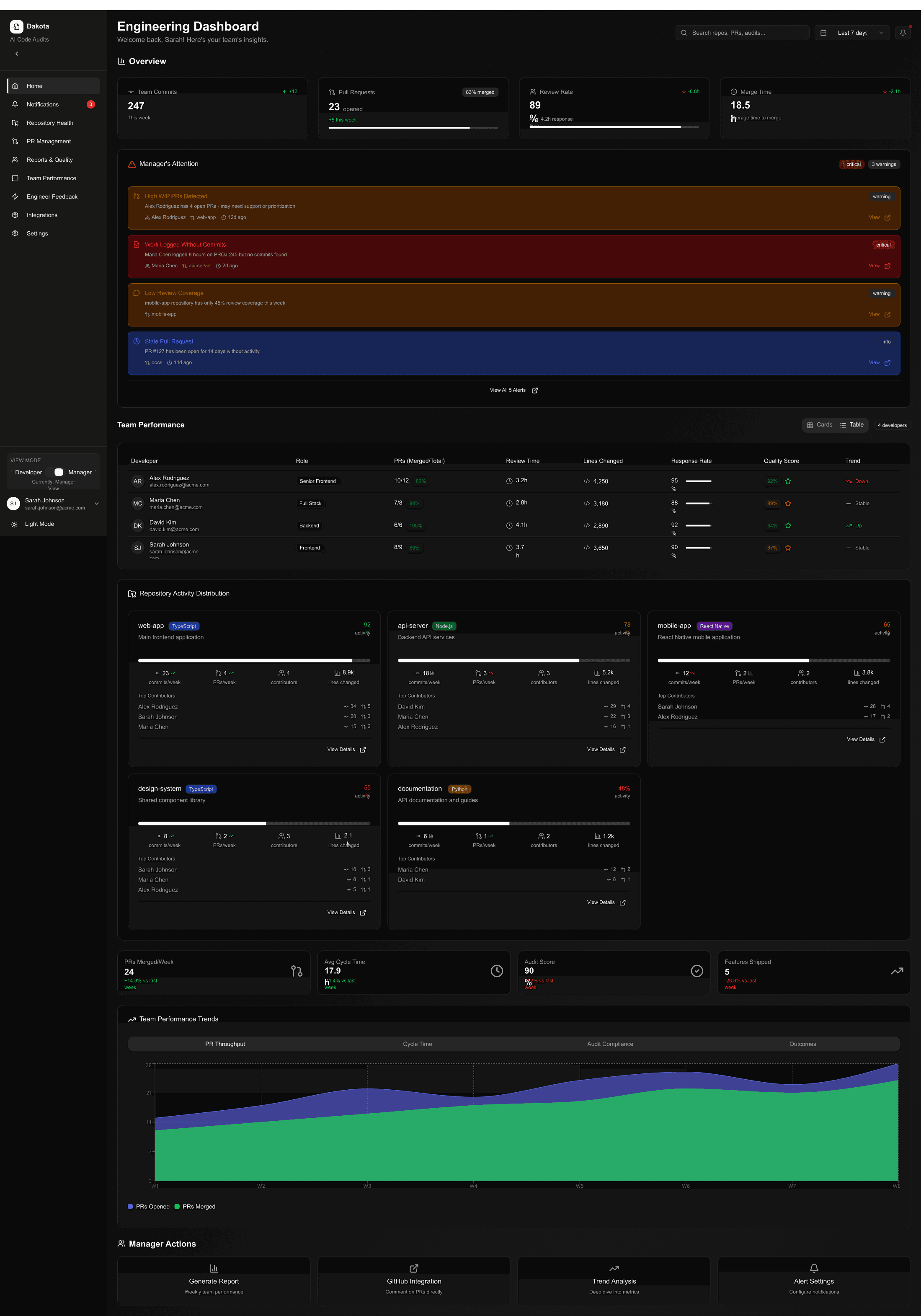

Dakota reduces the reliance on manual reviews while improving visibility into code health. Managers gain confidence in code quality, developers receive clearer guidance, and teams maintain consistent standards without disrupting their workflow.